There is a lot of buzz around the word “AI” in Legal Tech at the moment. AI is portrayed as the number one trend in the legal industry and beyond, with a particular focus on its potentially disruptive nature. Consequently, many lawyers want to understand how AI might change or disrupt their profession. Some are already a step ahead, asking how they could use it to reinvent their own law firms.

The hype (and sometimes hysteria) around AI at times seems to be based on an unrealistic picture of what current AI technology can do. AI seems almost magical: a technology we do not really understand that has the potential to solve all our problems and overcome our inherent human limitations. “But,” as Andrew Ng put it in a very interesting Harvard Business Review article, “it’s not magic.” Today’s AI is far from being magical, or even a “strong AI” (or “full AI”). Strong AI describes a hypothetical machine that exhibits behavior at least as skillful and flexible as that of humans. Instead, what we have today is “weak” or “narrow” AI. Weak AI is non-sentient AI that is focused on one narrow task and can only be used in a limited context. For example, a “chess AI” cannot be used for translation.

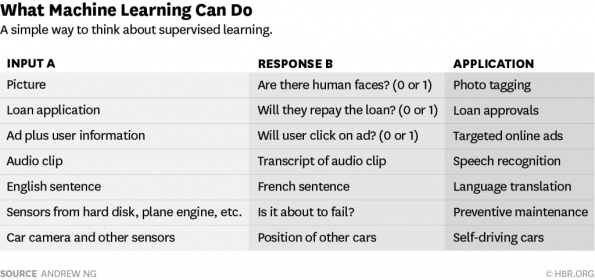

Let’s have a closer look and see what AI is able to do today:

At first glance, these types of AI seem to be relatively simple and somewhat limited. These examples of weak AI use some input data (A) to quickly generate some response (B). The technical term for this “input-output machine” is “supervised learning”. Supervised learning is the Machine Learning task of inferring a function from labeled training data (Wikipedia). Supervised learning has been improving rapidly in the last couple of years (in 2015 alone, technology companies spent $8.5 billion on deals and investments in artificial intelligence), and the most advanced systems are built on deep neural networks. But these systems still fall far short of strong AI.
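To make the “input (A) to output (B)” idea concrete, here is a minimal sketch of supervised learning in Python. It is only an illustration: the clause texts, the labels and the function names are invented for this example, and the nearest-neighbour method used here is one of the simplest possible techniques, far removed from the deep neural networks behind commercial systems.

```python
# Toy supervised learning: infer a mapping from input (A) to output (B)
# from labeled examples. All data and names below are hypothetical.

def tokenize(text):
    """Turn a sentence into a set of lowercase words."""
    return set(text.lower().split())

def jaccard(a, b):
    """Word overlap between two token sets, between 0.0 and 1.0."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def train(examples):
    """For nearest-neighbour learning, training just stores the labeled examples."""
    return [(tokenize(text), label) for text, label in examples]

def predict(model, text):
    """Return the label of the stored example most similar to the input."""
    tokens = tokenize(text)
    _, best_label = max(model, key=lambda pair: jaccard(tokens, pair[0]))
    return best_label

# Labeled training data: input (A) is a clause, output (B) is its type.
TRAINING_DATA = [
    ("the parties shall keep all information confidential", "confidentiality"),
    ("neither party may disclose confidential information", "confidentiality"),
    ("this agreement is governed by the laws of germany", "governing_law"),
    ("the laws of england govern this agreement", "governing_law"),
]

model = train(TRAINING_DATA)
print(predict(model, "each party shall keep the information confidential"))
```

Note how narrow this “AI” is: it can only ever answer with one of the two labels it has seen, whatever text it is given.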

There is a great challenge for companies developing AI based on supervised learning: it requires a huge amount of data. To train an AI algorithm, it needs to be fed with a lot of input-output training data. The input and output data must be chosen carefully. Otherwise the algorithm is not able to figure out the relationship between input and output in an accurate, non-biased way.
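One simple way to catch a badly chosen training set before any training happens is to check how the labels are distributed. The following sketch assumes a hypothetical set of labeled clauses and an arbitrary 20% threshold; neither comes from a real project, and a balanced label count is only one of many things that make training data suitable.

```python
from collections import Counter

def label_shares(examples):
    """Return the share of the training set that each label accounts for."""
    counts = Counter(label for _, label in examples)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}

def underrepresented(examples, min_share=0.2):
    """List labels whose share is below min_share (an assumed threshold)."""
    shares = label_shares(examples)
    return sorted(label for label, share in shares.items() if share < min_share)

# Hypothetical, deliberately skewed training set: 8 of 10 examples share one label.
EXAMPLES = (
    [("confidentiality clause %d" % i, "confidentiality") for i in range(8)]
    + [("governing law clause", "governing_law")]
    + [("termination clause", "termination")]
)

print(label_shares(EXAMPLES))
print(underrepresented(EXAMPLES))
```

An algorithm trained on such a set sees very few examples of the rare clause types and is likely to be biased towards the dominant one.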

This training process is the real challenge that sets different AI providers apart. While an AI algorithm might be reproducible with sufficiently deep pockets, it is extremely difficult to replicate training results because training data is usually scarce. The competitive advantage comes from having the best training data sets (in terms of size and variety) and from the amount of time put into training. Again in the words of Andrew Ng, “data, rather than software, is the defensible barrier for many businesses.”

This means early adopters and “producers” of AI may have a competitive edge, as they have sufficient time to collect enough training data and thus to properly train the AI. Therefore, it can be dangerous to jump on the AI bandwagon too late. Put differently, it may be a great chance for lawyers, law firms and legal departments to develop a weak AI in a lucrative niche. It will then be very difficult for copycats to reproduce the results in a short timeframe due to the lack of sufficient data.